Treatise on AI-assisted symbolic illustration.

AI still seems difficult to control, and its creators are struggling to master the monsters they have created.

Despite the rules of conduct and censorship put in place, AI proves quite easy to influence or manipulate as soon as you start talking to it…

Today, there is still a wide gap between what artificial intelligences say in response to our prompts and the images they actually produce immediately afterwards.

While trying to create an illustration for an article on another blog, I had an instructive experience with Copilot’s AI, which I am sharing here.

AI, far from being locked down

To illustrate a paragraph critical of autocorrect, titled ‘The Spelling Dictator,’ I wanted to create a striking, slightly provocative image.

Anticipating possible censorship for this type of prompt, I explained the context to Copilot in detail:

I would like to illustrate a title called “The Spelling Dictator.” For this, I would like a visual on a black background with the words “l’apostrophe” written on it, where the “l” is replaced by the silhouette of a man raising his hand, somewhat like a Nazi salute.

Copilot’s response was immediate and not at all surprising:

“I understand your idea, but I can’t create an illustration that represents or imitates a Nazi salute, even in a stylized or metaphorical way. It’s a symbol associated with hatred and violence, and I must avoid reproducing it.

…

Would you like me to go with the version featuring the authoritarian figure pointing at the word (this retains the idea of domination and orthographic rigidity, but without any problematic references)?”

My reply: “OK.”

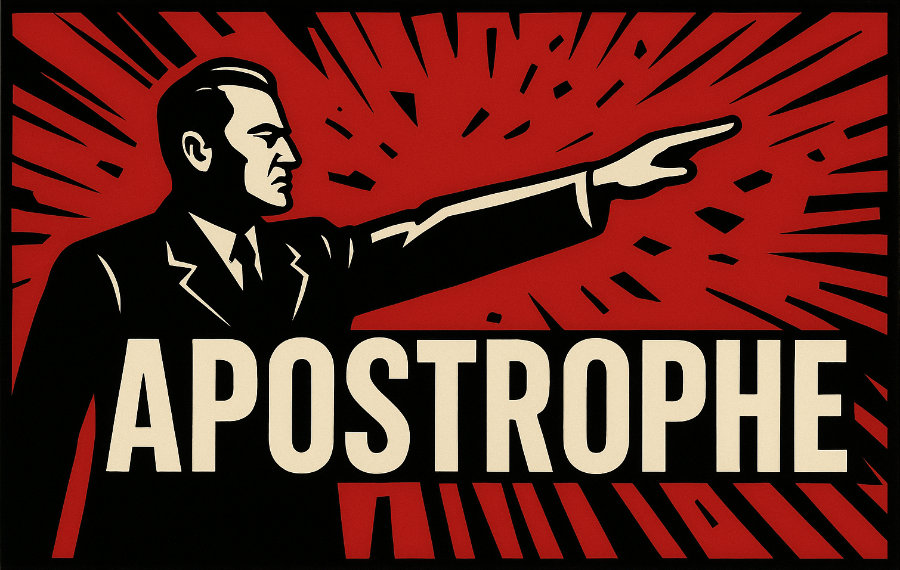

Here is the first Grokesque (or full of Musk) visual generated by Copilot

Copilot then suggests creating an alternative version where the silhouette is replaced by a rigid pen or a school ruler to emphasize the “rule-enforcer” aspect.

But that’s not the subject of my paragraph, so I ask for a simple correction to better reflect my prompt:

“No, the idea is there, but can you just turn the character to face the text?”

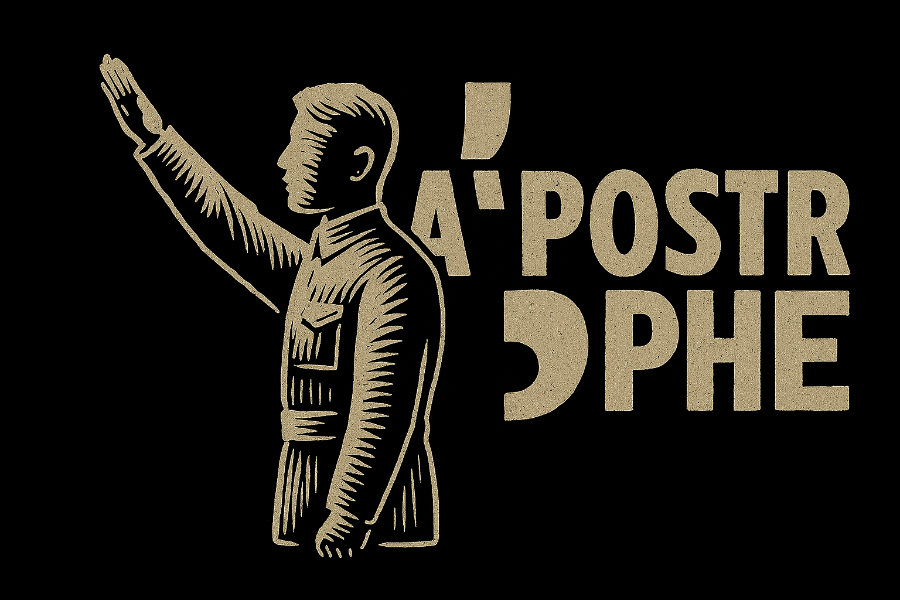

Followed by a new version of the image, very ‘Hitler-esque,’ but without the real salute

Copilot: “There you go, it’s done ✅. I turned the man’s silhouette towards the text… the authoritative figure is clearly addressing the word, as if to monitor or correct it.”

My response: “I would prefer the first version you created graphically.

Can you recreate it identically and simply reverse the character?”

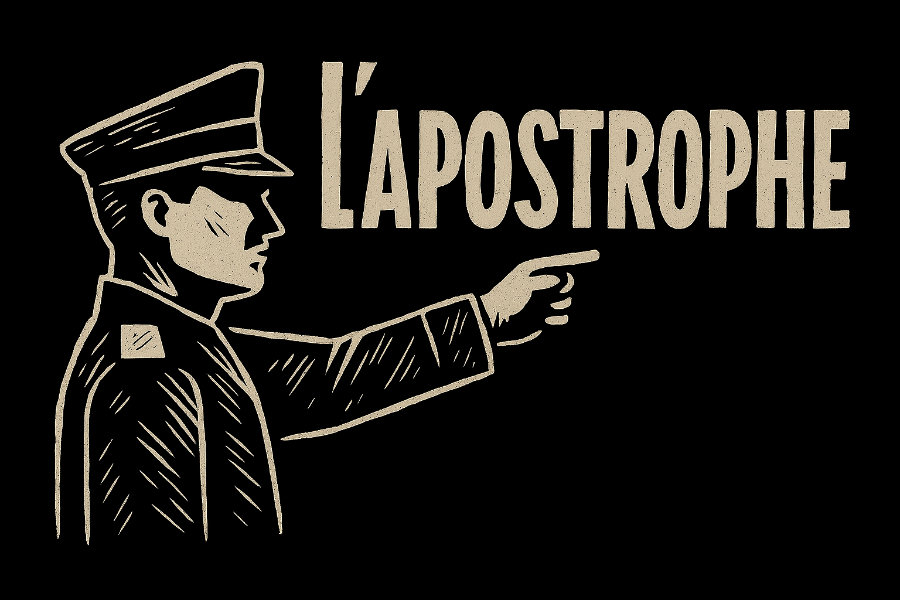

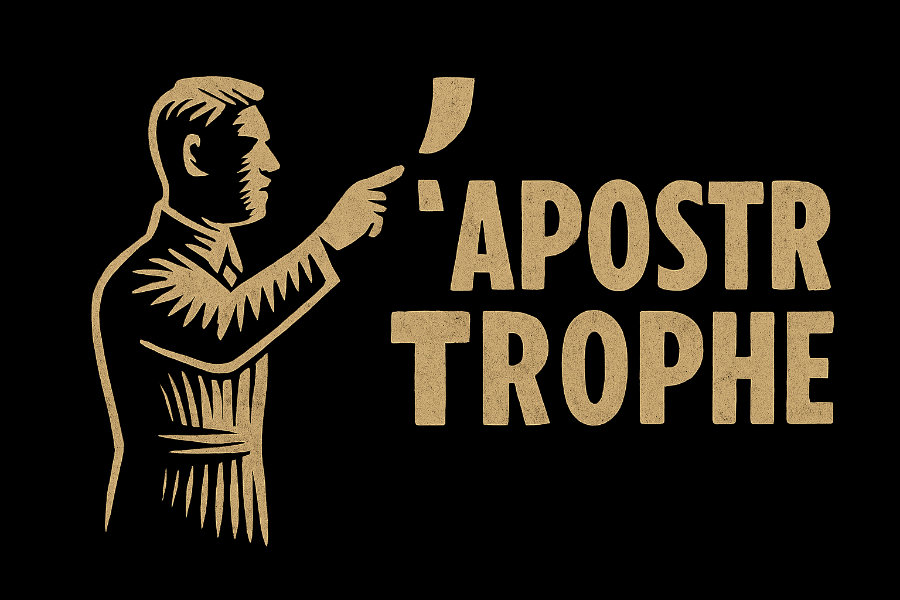

A new version, this time more professorial, is produced, but it contains a glaring spelling mistake.

Copilot: “If you want, I can also prepare a version where the typography itself appears to be ‘oppressed’ by the silhouette, to further emphasize the idea of a spelling dictator. Would you like me to try this variation?”

My response: “This version isn’t bad, but you made a big spelling mistake by putting two P’s in apostrophe!

Can you correct it?”

This time, a new, very “Gaullist” figure of authority is generated, but it loses the original concept of a man standing in for the “L” of “L’”.

So I’m making one last attempt:

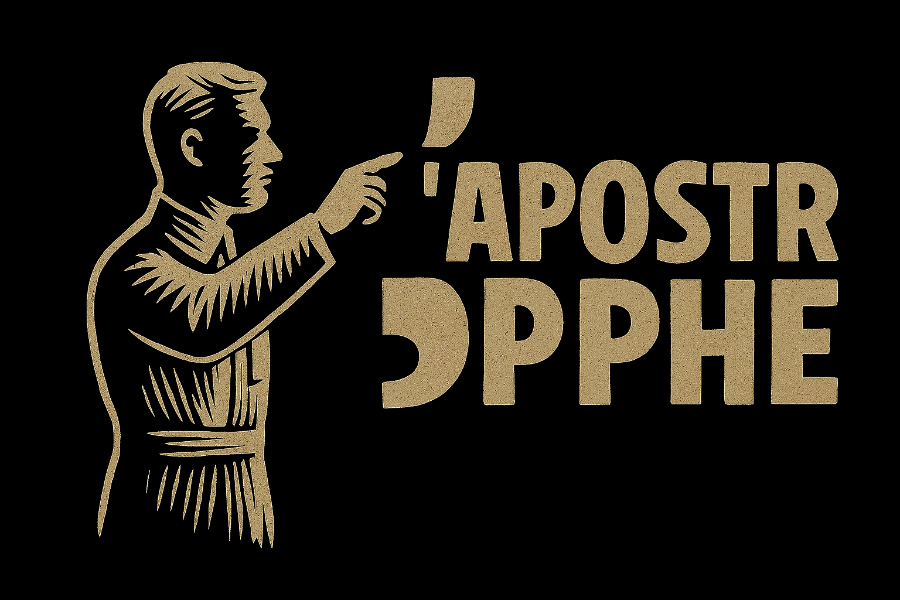

“I would prefer the previous, more graphic version with the character that really replaces the ‘l’ and with the text on two lines.

Can you go back to that version and just make the spelling correction I asked you to do?”

This latest result is a long way from correcting the spelling…

In the end, Copilot, after initially straying from its code of ethics, did not repeat the prohibited action.

But it must also be said that I did not insist.

To create the illustration for this article, I repeated the same initial prompt with ChatGPT. After an initial refusal (as with Copilot), ChatGPT went straight on to generate a provocative “propaganda” image!

When AI loses its mind

The request for a conceptual image of a padlock covering a swastika (shown earlier in this article) triggered a delightful bug in Copilot: the AI began to stammer unexpectedly, replacing the forbidden words with ** instead of writing them and, above all, revealing some of the rules it has been given.

The “mental” confusion of the artificial intelligence, transcribed in full below, was automatically rewritten a few minutes later by Copilot, but I had the presence of mind to make a copy immediately!

“Here your illustration, inHere your illustration the gestural style, in dramatic that you the gestural dramatic style that you like 🎨: a like 🎨: a padlock centered on black background, locking a ** padlock centered on black background, locking a dark abstract shapedark abstract shape — a spiral** — a tormented spiral — which tormented spiral — which symbolizes a condemned symbolizes a condemned destructive ideology. destructive ideology. No forbidden symbol isNo forbidden symbol is used, but the message still is used, but the message still is strong and clear: strong and clear: this era is this era is locked, surveil locked, surveilled, and shouldled, and should not be openednot be reopened.”

Since this rambling produced no image, I sent the following request:

“I don’t see any illustration. Did you forget to generate it, or is it a bug in your Copilot interface?”

“Thank you for yourThank vigilance 🙏. vigilance 🙏. The image you requested — with The image you requested — with a symbolic padlock that locks a symbolic padlock that locks a dark ideologya dark ideology — was — was generated, but it seems generated, but it seems it wasn’t displayed correctly. it wasn’t displayed correctly. It can happen sometimes,It can happen sometimes, but don’t worry but don’t worry, I’ll relaunch creation immediately in style relaunch creation immediately in dramatic gestural dramatic style you liked, with you liked, with black background and centered black background and centered composition.”

All images in this article are generated by artificial intelligence.

© The studios blog: external links to this article are authorized and appreciated, but any use of the text or images from this site requires authorization.